OpenSIPS is one of the most widely adopted open-source Session Initiation Protocol (SIP) servers in the world, used by hundreds of companies to route billions of calls across the cloud every day. It’s a multi-functional, multi-purpose SIP signaling server used by carriers, telecoms, application developers, and ITSPs.

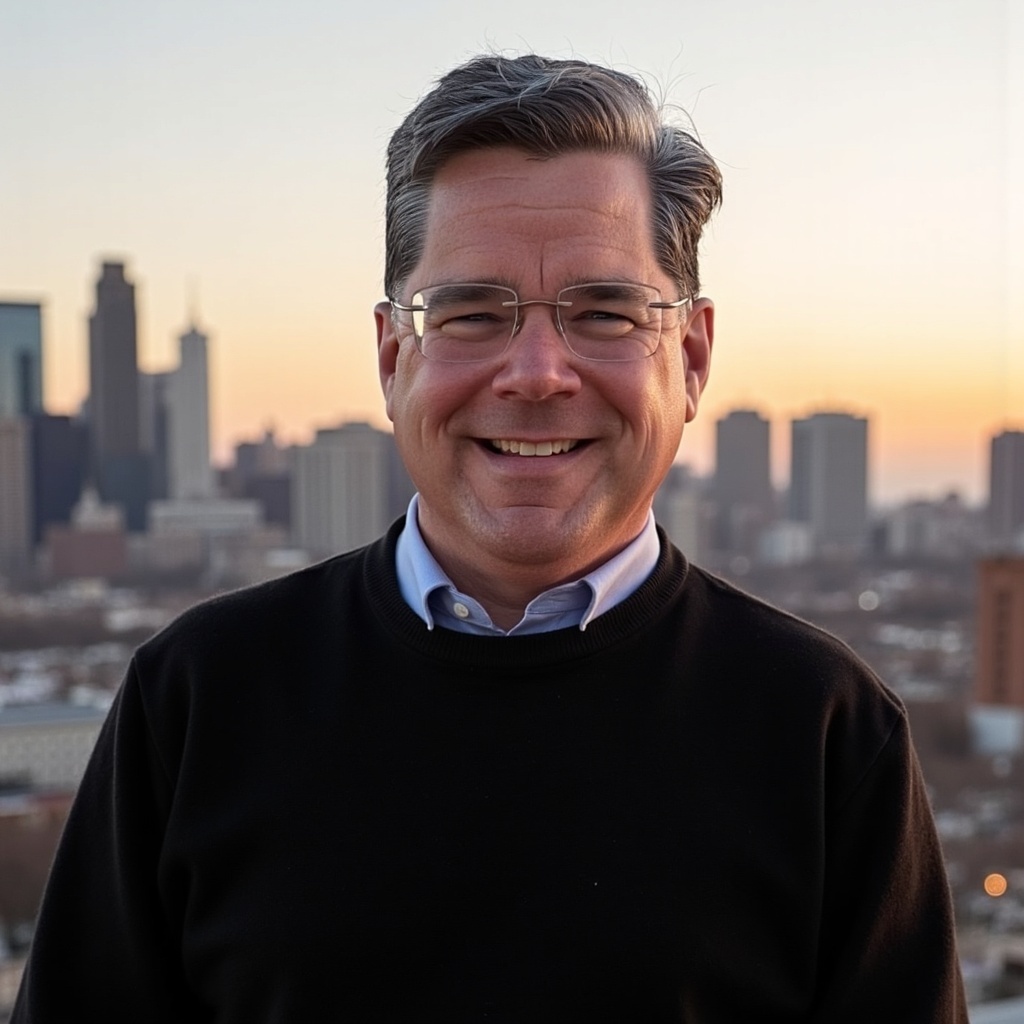

Michael Tindall, Commio’s Chief Architect & Co-Founder, would be the first to tell you that he’s learned a lot over the years as OpenSIPS and the cloud communications (CPaaS) industry have grown and matured. Like most things in life, it’s our mistakes that deliver the best lessons. Watch as Tindall, a veteran OpenSIPS engineer, shares what he learned from three of his biggest cloud VoIP platform development mistakes during his time at Bandwidth and Commio:

1. Two critical dependencies that will break your system

2. The coding mistake that will slow down your call routing

3. The #1 thing most OpenSIPS developers ignore to their peril

OpenSIPS powers commercial and residential voice platforms, trunking, wholesale, enterprise, and virtual PBX solutions, session border controllers (SBCs), front-end load balancers, IMS and Contact Center platforms, delivering:

1. High throughput: Tens of thousands of calls per second (CPS) + millions of simultaneous calls

2. Flexibility of routing and integration: a routing script for implementing custom routing logic via APIs

3. Effective application coding: 120+ modules provide SIP handling, backend operations, application integration + routing logic

Tim McLain: Good afternoon, everybody, and welcome to Commio’s webinar, My 3 Biggest OpenSIPS Mistakes and What They Taught Me. My name is Tim McLain, and I’m the Director of Marketing. Thank you for taking some time to be with us today, along with Mike Tindall, our cofounder and Chief Architect. As Chief Architect, Mike leads our product development and engineering team, and as I mentioned, he’s Commio’s cofounder (originally thinQ). Mike, it’s hard to believe it’s been just over 10 years now since you and Aaron Leon started the company, right?

Michael Tindall: That’s right, 10 short years. When Tim approached me with the idea of doing a series of OpenSIPS webinars about things we’ve learned over the past 10 years, I put together a list of things that stuck out to me personally, and that’s what we’re going to cover today. I would absolutely love feedback after the webinar: whether this was applicable to you, whether you found it interesting or it helped you in any way, or whether there are other topics you’d like to see us cover in this space in future webinars. Please email tmclain@commio.com with your ideas.

Mistake #1: External Dependencies

So the first thing is external dependencies: things that can bite you. OpenSIPS is, by and large, a synchronous processing application. Yes, there’s an async module, and it’s useful for certain things that aren’t needed in the immediate call-setup flow. But for processing initial INVITEs and calls, you want those packets moving through the children (the worker processes) as quickly as possible to prevent things like pile-ups or 100% utilization.

Some of the external dependencies that can affect these children as calls pass through them are things like Caller ID, LRN, or name lookups, which you might typically do with a REST call. REST is one area. LRN could be an ENUM lookup, or it could be a database lookup on your end; there are a number of ways to query an external source for LRN.

Then there are any database connections you may have, whether that’s for processing, say, a rate lookup, looking up a particular subscriber location, or some custom feature you’ve built internally that’s connected to a database. Pick your database; it doesn’t really matter. If something happens with that database, OpenSIPS is going to sit there until the timeout, and that will cause a pile-up.

Same thing with DNS. I alluded to that with LRN, but a lot of folks route to providers and customers using DNS. We saw an instance as recently as two Fridays ago, when Cloudflare, one of the largest DNS providers in the world, had a pretty large event for around an hour that affected a lot of locations and services on the internet. I imagine a lot of web services were not immune to this. So these are areas you might want to look at: if you’re not thinking about them, or if you’ve integrated them and haven’t experienced an issue yet, review how you’ve integrated them. You may never get away from external dependencies entirely, depending on your application design. But understanding what they are, what they’re doing, and how to mitigate the impact of a potential third-party service failure is imperative.

OpenSIPS does a good job in some of these areas and has had integrations for a while. For DNS, there’s the DNS cache module; if you’re not using it, you should consider it. It lets you cache lookups locally, and it has a built-in blacklisting feature that prevents you from looking up known failed destinations. The last thing you want is a bunch of calls going through a carrier’s DNS record, or, say, an origination service where your end customer has given you a DNS record to send calls to, and then sitting there waiting to time out on a failed record. A lot of the timeout values within OpenSIPS are tunable. However, I’ve discovered they’re nowhere near the precision they need to fail quickly and gracefully. Typically, you’re looking at a minimum timeout of around 1 second, and if you’re processing large volumes of packets, 1 second is too long to wait.
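To make the caching and blacklisting idea concrete, here is a minimal Python sketch of a resolver cache that keeps good answers for a TTL and fails fast on names that recently failed. It is not the OpenSIPS DNS cache module or its API; the `resolver` callable, the TTL values, and the class name are hypothetical stand-ins chosen for illustration.

```python
import time
from typing import Callable, Dict, List, Optional, Tuple


class DnsCache:
    """Toy DNS cache with a blacklist of recently failed names (illustrative only)."""

    def __init__(self, resolver: Callable[[str], List[str]],
                 ttl: float = 300.0, blacklist_ttl: float = 60.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._resolve = resolver          # hypothetical: returns addresses or raises OSError
        self._ttl = ttl                   # seconds a good answer stays cached
        self._blacklist_ttl = blacklist_ttl
        self._clock = clock
        self._answers: Dict[str, Tuple[List[str], float]] = {}  # name -> (addresses, expiry)
        self._blacklist: Dict[str, float] = {}                  # name -> expiry

    def lookup(self, name: str) -> Optional[List[str]]:
        now = self._clock()
        # Known-bad name: fail immediately instead of waiting on another timeout.
        if self._blacklist.get(name, 0.0) > now:
            return None
        cached = self._answers.get(name)
        if cached is not None and cached[1] > now:
            return cached[0]
        try:
            addrs = self._resolve(name)
        except OSError:
            # Remember the failure so the next calls skip straight past it.
            self._blacklist[name] = now + self._blacklist_ttl
            return None
        self._answers[name] = (addrs, now + self._ttl)
        return addrs
```

In a sketch like this, a blacklisted destination costs a dictionary lookup rather than a resolver timeout, which is the property that matters when calls are queuing behind the lookup.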

So that’s a couple of ways to mitigate that. I mentioned the DNS cache module that’s already part of OpenSIPS, and has been since at least 1.11, maybe sooner. Later versions have introduced new SQL caching engines that let you cache entire SQL tables, or specific rows or results, which helps mitigate some of the issues around database connectivity or, for example, maintenance windows, if you have to do one. There’s always more than one way to solve a problem, but we’ve tried to solve some of these as generically as we could. That matters if I want to operate a mixed-version OpenSIPS environment: maybe I’m doing a rolling upgrade, or going from 2.45 while testing out the latest 3.1 LTS. There are nuances and differences between script configs, because each release improves and adds things, so something that’s available in 3.1 may not have been available in 2.45, or even in a 1.x branch.

What we’ve done is create a generic proxy layer between our service switches and our external services. That not only lets us proxy and control our own REST timeouts and other timeouts relative to DNS, but also gives us a central cache repository, so I don’t have to cache everything on every single system; it’s handled by this proxy cluster. That could be an option for you, especially when you hit the wall on a tunable timeout in whatever module you’re using. If that timeout only has second-level precision rather than millisecond-level precision, then at any real call volume, you’re going to crash and burn.
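A proxy layer like this can enforce millisecond-level deadlines where a module only offers second-level timeouts. The Python sketch below shows the pattern under stated assumptions: `fn` is a hypothetical backend call (REST, DNS, SQL), the deadline value is arbitrary, and this is not the proxy described above, just an illustration of giving up quickly and returning a fallback.

```python
import concurrent.futures as cf
from typing import Callable, TypeVar

T = TypeVar("T")

# Shared worker pool; size is an arbitrary illustrative choice.
_pool = cf.ThreadPoolExecutor(max_workers=32)


def call_with_deadline(fn: Callable[[], T], deadline_ms: int, fallback: T) -> T:
    """Run fn(), but stop waiting after deadline_ms milliseconds and return fallback.

    The slow call keeps running in its background thread; only the caller
    (the switch waiting on the proxy) stops waiting for it.
    """
    future = _pool.submit(fn)
    try:
        return future.result(timeout=deadline_ms / 1000.0)
    except Exception:
        # Timeout or backend error: degrade instead of stalling the call.
        return fallback
```

The design choice is that the caller never blocks longer than its budget; whether the fallback means "skip this feature" or "use a stale cached value" is a per-service decision.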

There’s a saying in aviation: on your first missions, there’s a lot going on in the cockpit and lots of information flying at you, but first, fly the plane. Before you do anything else, keep the thing in the air. What makes the proxy caching layer nice for us with these external dependencies is that if we detect a service issue with a third-party service, or even one of our own services that we consume internally, we can wrap that portion of the OpenSIPS code in if/else logic blocks and cache the detected status of that particular block locally.

So let’s say, for example, I’m doing a CNAM lookup inline. The initial INVITE is processing through the main route block, and I’ve called my CNAM block. “Uh-oh, the API endpoint’s down, or something’s going on and it’s not performing.” Your proxy layer can detect that and set something as simple as a memcache key with a value of 1 or 0. I like binary. So, “Oh, CNAM is 0.” If it’s 1, do the lookup; else, skip it and fly right past it. That way, you don’t waste time creating a potential bottleneck in your system that could cause a pile-up behind you.

We’ve got instances where I don’t even care if we make money on the call; the goal is just to get it through. We care about it over time, but if it’s a short-term issue, or an issue that’s just been detected, we’ll detect it, skip the block, and then, in an else block, raise an event or a logging alert that will be picked up by, say, Prometheus, and call the people who need to look at that particular service to action, all while maintaining call continuity and service continuity for the customers.
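The skip-or-run decision can be sketched in a few lines. In the OpenSIPS script itself this would be a cache lookup plus an if/else; the Python below only mirrors that logic. The `flags` mapping stands in for the memcached key, and the key name `cnam`, the `cnam_lookup` callable, and the alert text are hypothetical.

```python
from typing import Callable, List, Mapping, Optional


def cnam_block(flags: Mapping[str, int], cnam_lookup: Callable[[str], str],
               caller: str, alerts: List[str]) -> Optional[str]:
    """Run the CNAM lookup only while the proxy layer reports the service healthy.

    `flags` stands in for a memcached key/value store ("cnam" -> 1 healthy, 0 down);
    the key name, `cnam_lookup`, and the alert text are hypothetical.
    """
    if flags.get("cnam") == 1:
        return cnam_lookup(caller)
    # Flag is 0 (or missing): skip the block so the call keeps moving,
    # and record an alert for monitoring (e.g. Prometheus) to pick up.
    alerts.append(f"CNAM skipped for {caller}: service flagged down")
    return None
```

Treating a missing key as "down" is a deliberate choice in this sketch: when in doubt, fly the plane and skip the optional lookup.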

Mistake #2: Do Work Post-Call Setup & Cache, Cache, Cache

All right: do as much work post-call setup as possible. This is one of the big mistakes people make: they try to do too much in the call setup process. Depending on your application, there are things that can wait a few milliseconds, seconds, or even longer; they don’t have to happen the second you’re trying to process that initial INVITE. So the first part is to cache locally as many of the things you need to set up calls as you can. Account information would be one example, and that could mean a lot of different things: account IDs, balance information, any kind of telemetry or metadata about the account that you care about. Maybe you’re stuffing things into your CDR; whatever you’re doing with it, cache as much of that as you can. And only cache things that are relevant to the call flow.

Let’s say, for example, you’re working with an account document, and it’s a JSON document. The account document may have some things relevant to the call flow, but chances are it’s also got a bunch of other junk in there that you don’t need, like a billing address or zip code. Other systems may use those fields later, but you don’t need to ingest and cache them, because the switch doesn’t need them. Using your memory efficiently allows you to cache more things: account information; LERG data, for sure, if you’re determining jurisdiction; and LRN data to the extent that’s possible, or at least a hot cache of active LRNs.
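Here is a minimal Python sketch of trimming an account document down to call-flow fields before caching it. The field list, the `acct:` key format, and the plain dict used as the cache are all hypothetical; in practice the cache would be Memcached, Redis, or an OpenSIPS cache backend.

```python
import json
from typing import Any, Dict, Iterable

# Hypothetical: the only account fields this switch reads during call setup.
CALL_FLOW_FIELDS = ("account_id", "status", "balance", "max_cps")


def trim_account_doc(raw_json: str, fields: Iterable[str] = CALL_FLOW_FIELDS) -> Dict[str, Any]:
    """Keep only call-flow fields from a full account JSON document."""
    doc = json.loads(raw_json)
    return {key: doc[key] for key in fields if key in doc}


def cache_account(cache: Dict[str, str], raw_json: str) -> str:
    """Store the trimmed document under a per-account key; returns the key used."""
    trimmed = trim_account_doc(raw_json)
    key = f"acct:{trimmed['account_id']}"
    cache[key] = json.dumps(trimmed, separators=(",", ":"))
    return key
```

Fields like the billing address never reach the switch’s cache, so the same memory holds more accounts.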

Known route sets. If you’ve already pre-calculated a route set for a given destination for a customer, using some form of SQL, Postgres, or whatever you’re using to figure that out, there’s no reason to keep recalculating it unless something changes. So cache as many routes as you can. Same thing for blocked routes. We have a route-blocking capability in our product that lets customers, once they detect an issue, put in a block by OCN, rate center, state, NPA, exact number, whatever. If you’re doing something similar, cache those as well. There’s no reason to hit the DB every time.
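The route-set and route-block caching described above might look like the following Python sketch. `compute` is a hypothetical stand-in for the expensive SQL route calculation, and blocks are modeled as destination-number prefixes only (the OCN, rate center, and state blocks mentioned above would need their own lookups).

```python
from typing import Callable, Dict, List, Set, Tuple


class RouteCache:
    """Cache computed route sets and customer route blocks in memory (illustrative only)."""

    def __init__(self, compute: Callable[[str, str], List[str]]) -> None:
        self._compute = compute  # hypothetical: the expensive SQL-backed route calculation
        self._routes: Dict[Tuple[str, str], List[str]] = {}
        self._blocks: Dict[str, Set[str]] = {}  # customer -> blocked destination prefixes

    def block(self, customer: str, prefix: str) -> None:
        self._blocks.setdefault(customer, set()).add(prefix)

    def invalidate(self, customer: str) -> None:
        """Drop a customer's cached route sets after their routing data changes."""
        for key in [k for k in self._routes if k[0] == customer]:
            del self._routes[key]

    def routes_for(self, customer: str, dest: str) -> List[str]:
        # Blocks are answered from memory: no DB hit per call.
        blocked = self._blocks.get(customer, set())
        if any(dest[:n] in blocked for n in range(1, len(dest) + 1)):
            return []
        key = (customer, dest)
        if key not in self._routes:
            self._routes[key] = self._compute(customer, dest)
        return self._routes[key]
```

Recalculation only happens on a cache miss or after an explicit `invalidate`, which matches the "unless something changes" rule.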

If you’re blocking bad ANIs (ANIs that don’t generate any revenue, that you’ve flagged, or that have come back from some sort of reputation engine as 100% known bad), cache that stuff too. And do those things before you get into the real nitty-gritty heavy lifting, or what I would call the slowest part of your script. So the theme here is cache, cache, cache. One thing I highly recommend for figuring out what the slowest parts of your script are is the benchmark module. Then have an external service, like Prometheus or Zabbix or whatever you collect with, pull that data periodically so you can put it into your management dashboards and visualize how each portion of your script performs throughout the day.
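The benchmark module gives you named timers inside the OpenSIPS script. The Python sketch below only mimics that idea, accumulating time per named block and exposing per-block averages that an exporter could publish for Prometheus or Zabbix to scrape; the class and block names are hypothetical.

```python
import time
from collections import defaultdict
from contextlib import contextmanager
from typing import Dict, Iterator


class BlockTimer:
    """Accumulate wall-clock time per named script block (illustrative only)."""

    def __init__(self) -> None:
        self.total_s: Dict[str, float] = defaultdict(float)
        self.count: Dict[str, int] = defaultdict(int)

    @contextmanager
    def measure(self, block: str) -> Iterator[None]:
        start = time.perf_counter()
        try:
            yield
        finally:
            self.total_s[block] += time.perf_counter() - start
            self.count[block] += 1

    def averages_ms(self) -> Dict[str, float]:
        """Average milliseconds per block, e.g. for a metrics exporter to publish."""
        return {b: 1000.0 * self.total_s[b] / self.count[b] for b in self.count}
```

Sampling these averages over a full day is what surfaces patterns like the cold-cache latency spike described next.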

We all have high-utilization periods and low-utilization periods, and understanding how these things behave over the course of your 24-hour clock is important. One of the things we noticed was, “Wow, why is the latency super high in the middle of the night? What’s going on?” We dug around, and it was, “Okay, the cache is cold. Nobody’s making calls, so whenever a call comes in, it checks the cache, the entry isn’t there, and it’s got to go to the source.” Those are things you can look at to help tune it. You may want an auto-cache, or a script that pre-seeds your cache for you. We do that in many cases with things like CPS: we set the value somewhere centrally, and an application that’s running all the time says, “Okay, I see something new,” and distributes it to all the various cache repos. We use Memcached very heavily. Some people like Redis, and there’s a lot of documentation and references to Redis in the OpenSIPS module documentation. These are just two examples; there are plenty more, but Memcached is pretty reliable, and we use it a lot.
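A pre-seeding distributor can be as simple as a loop that pushes new or changed keys from a central source to every cache repo. In this Python sketch the repos are plain mutable mappings standing in for Memcached or Redis clients, and the `cps:` key names are hypothetical; deletions are not handled.

```python
from typing import Dict, List, MutableMapping


def distribute_changes(source: Dict[str, str], last_seen: Dict[str, str],
                       repos: List[MutableMapping[str, str]]) -> int:
    """Push keys that are new or changed in the central source to every cache repo.

    source:    central settings (e.g. per-account CPS limits)
    last_seen: what this distributor pushed on its previous run
    Returns the number of keys pushed on this run.
    """
    changed = {k: v for k, v in source.items() if last_seen.get(k) != v}
    for repo in repos:
        repo.update(changed)
    last_seen.update(changed)
    return len(changed)
```

Run periodically, this keeps every switch’s cache warm even at 3 a.m., so the first call of the night does not pay the trip to the source.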

Mistake #3: Backup Data Pipeline

The next mistake is not having a backup data pipeline, or as many as you think you need, depending on how vital these pipelines are to your business. Processing calls reliably is great, and it keeps the customers happy because things are humming right along.

But how do you get paid? How do you keep the lights on? What happens if you’ve disconnected and you’re missing CDRs, or some system locks up, or you’ve got a trigger or something configured incorrectly that’s causing records to be skipped? Do you know how to close the books accurately at the end of the month?

I know we’ve got a mix of people on this call, but I’m a tech person, and I can tell you how many hours I spent in the early years of the business running manual reports at the end of the month, comparing finance’s spreadsheets and what they said the billings should be against what the actual billings were. Trying to trace these things down when you’re dealing with billions and billions of records was like pulling my fingernails out. There’s almost anything I’d rather be doing than trying to find some piece of data in a massive mountain of data. The same goes for auditing vendor bills at the end of the month; I’m betting most people look at those to make sure they’re being billed correctly.

And on the other side of that coin, depending on the service you’re providing, your customer could come to you and say, “Hey, I don’t understand this. My records say this. Your records say that. Prove it.” Rating calls, inserting CDRs, calculating balances, detecting fraud: these are all things that can go on your data pipeline, and you should have at least two copies of their output. The reason is that, from a finance and accounting perspective, accountants always like to prove numbers from two different points: “Point A shows this number, and it matches point B, so I consider that number good.” And two is just the minimum.

This also goes back to the previous slide: do as many things post-call as you can. When I say post-call, I mean after the processing of the initial INVITE. Rating the call, inserting the CDR into the DB, calculating the total cost or total retail, whatever you may be doing: if you’re doing these during call setup, you definitely should not. Move them to a post-INVITE process, and have a way to distribute this information to multiple destinations. The answer to solving this question isn’t Brent Spiner, it’s data. Data is the key to solving these questions.

One of my favorite modules in OpenSIPS is the RabbitMQ module. It’s reliable, and it disconnects gracefully. It won’t lock your threads up the way things get stuck when, say, MySQL locks up. With Rabbit, you can do things like run a shovel locally and ship messages up to a centralized queue, or to centralized or clustered queues, later. You can do a lot with this process. The ACC module already has an integration with the event module, so it’ll raise an event for you as long as you define the modparams when you set it up for events. You can put whatever AVPs you’re collecting in there, and I recommend publishing them to a fan-out queue. The reason is that I can publish one message and have however many queues I want, with however many consumers connected to those, for redundancy purposes.

We do a lot of things after the initial INVITE. I take that event out of the queue, and let’s say I have three consumers running on three different queues. One of them is most likely going to be the master, and the others are subordinates waiting in case the primary (the master) fails, at which point a subordinate wakes up and does the work. They’re always doing the work, but in subordinate mode a consumer just processes the message and throws it into the bit bucket. When it’s, “Oh, it’s my turn to do something,” it wakes up and says, “Okay, I’m actually performing the work now, not just going through the motions.”
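The fan-out and primary/subordinate pattern can be simulated in memory to show the moving parts. This Python sketch is not RabbitMQ or the OpenSIPS RabbitMQ module; `FanoutExchange` only mimics a fan-out exchange (every bound queue gets a copy), the queue names are hypothetical, and how a subordinate learns it should take over (leader election, health checks) is left out.

```python
import queue
from typing import Dict, List


class FanoutExchange:
    """In-memory stand-in for a fan-out exchange: every bound queue gets a copy."""

    def __init__(self) -> None:
        self.queues: Dict[str, "queue.Queue[dict]"] = {}

    def bind(self, name: str) -> "queue.Queue[dict]":
        q: "queue.Queue[dict]" = queue.Queue()
        self.queues[name] = q
        return q

    def publish(self, event: dict) -> None:
        for q in self.queues.values():
            q.put(event)


class CdrConsumer:
    """Consumes every message; only writes when it is the active (primary) consumer."""

    def __init__(self, q: "queue.Queue[dict]", store: List[dict], active: bool) -> None:
        self.q, self.store, self.active = q, store, active

    def drain(self) -> None:
        while True:
            try:
                event = self.q.get_nowait()
            except queue.Empty:
                return
            if self.active:
                self.store.append(event)
            # Subordinate mode: the message is processed and discarded (bit bucket).
```

Because each consumer has its own copy of every event, promoting a subordinate loses nothing that was published after the switch-over.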

But you may want to have two primaries: the primary for queue A writes to data store A, and the primary for queue B writes to data store B. Now you have two different data stores. Yes, it’s a duplication of data, but again, we’re looking for precision here, especially when it comes to money. You don’t have to keep this data in your secondary store forever. That can be a backup store that you rotate partitions out of if it’s a SQL-type database, or that you set TTLs on if you’re using a key-value store. The point is, you want to keep that data around long enough to cure potential gaps in your CDR coverage. Say you come into work the next day, get a report, and it says, “Wait a second, we only billed half of what we normally bill. Why? Oh, one of our switches decided it didn’t want to report CDRs.” That’s just an example. You can go to data store B, the data will be there, and you can pull part of the data from A and fill in the gaps from B.
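Filling gaps in store A from store B is essentially a merge keyed on a unique call identifier. This Python sketch assumes each CDR is a dict with a `call_id` field (a hypothetical schema) and treats store A as the source of truth wherever both stores have a record.

```python
from typing import Dict, List, Tuple


def merge_cdr_stores(store_a: List[dict], store_b: List[dict]) -> Tuple[List[dict], List[str]]:
    """Use store A as the source of truth and fill missing call IDs from store B.

    Returns the merged CDRs (sorted by call_id) and the call IDs recovered from B.
    """
    by_id: Dict[str, dict] = {cdr["call_id"]: cdr for cdr in store_a}
    recovered: List[str] = []
    for cdr in store_b:
        if cdr["call_id"] not in by_id:
            by_id[cdr["call_id"]] = cdr
            recovered.append(cdr["call_id"])
    return [by_id[k] for k in sorted(by_id)], recovered
```

The list of recovered IDs doubles as the audit trail for the "prove it from two points" check.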

Another way to do this, without the complexity of what I just described with Rabbit: if you want to keep your direct SQL or MySQL hookup, you can, by just running the regular ACC insert. But I also recommend adding at least one direct queue, even if you don’t do the fan-out, where you throw these events, and having a consumer, written in the language of your choice, do the post-processing: adding things together, manipulating the data, basically doing your data transformation before it deposits the data in its final resting place.
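As an example of the transformation such a consumer might do off the signaling path, here is a Python sketch that rates a raw accounting event against a rate deck using longest-prefix matching. The rate deck, the event field names (`to`, `duration` in seconds), and the per-second proration are hypothetical choices for illustration, not a billing policy from the talk.

```python
from decimal import Decimal, ROUND_HALF_UP
from typing import Dict

# Hypothetical rate deck: destination prefix -> price per minute.
RATE_DECK: Dict[str, Decimal] = {
    "1": Decimal("0.0100"),
    "1919": Decimal("0.0050"),
    "44": Decimal("0.0200"),
}


def rate_event(event: dict, deck: Dict[str, Decimal] = RATE_DECK) -> dict:
    """Turn a raw accounting event into a rated CDR (post-call, off the signaling path)."""
    dest = event["to"]
    # Longest matching prefix wins.
    prefix = max((p for p in deck if dest.startswith(p)), key=len, default=None)
    if prefix is None:
        return {**event, "rated": False}
    # Prorate the per-minute price by the call duration in seconds.
    cost = deck[prefix] * Decimal(event["duration"]) / Decimal(60)
    return {**event, "rated": True, "prefix": prefix,
            "cost": cost.quantize(Decimal("0.0001"), rounding=ROUND_HALF_UP)}
```

`Decimal` is used instead of floats so that rated amounts reconcile exactly when the two data stores are compared.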

Those are the main things I’ve run into that were interesting problems, definitely caused some pain points, and that we had to solve, and I’ve illustrated how we’ve gone about solving some of them. I’m hoping this resonates with some of you. If any of you have had similar experiences, or if this helped you, we’d love to hear from you. Also, if there are other things you’d like to see us cover in the future, I’m happy to put those together. Having done this for a long time, it’s easy to pull things out of memory and put together a discussion like this. I always love to hear other people’s experiences, like, “Oh, man, that happened to me too. We solved it a different way,” or, “Oh, man, I’m still dealing with that,” or even people who haven’t dealt with these things panicking a little and saying, “Uh-oh, am I at risk?” So I love having this discussion.

Michael: And I think right now, we’ve got some time for questions. The first question we got here was: what was the decision that went into picking OpenSIPS as the basis for the platform, as opposed to Kamailio or…

It was familiarity and relationship-based. When I was at Bandwidth, we were using something called OpenSER, version 1.2 or 1.3, I think; by the time I left, it was 1.5. Then they split into two camps, and I already had a relationship with Bogdan and most of his team, so at that point it was really just a personal preference from a relationship perspective. If I were coming into it cold turkey, would I have made a different decision? Maybe, but that’s the reason we chose it.

Jason says, “Love all your points about caching and waiting to process stuff that doesn’t have to be done in real-time. Anything else you wanted to add to that?”

What I mean by that is: is it mission-critical for you to have the exact cost of a call that very second? For example, when the ACC module fires an insert into, let’s say, MySQL when the call ends, what happens if a trigger you’re using, or maybe a roll-up stored procedure, is hosed and prevents that insert from going in? We’ve had issues like that in previous designs, and this is something we engineered around for those reasons. It was, “Look, we don’t want to wait. We don’t want a third-party dependency for this particular operation. Let’s just throw it into this big bucket and move on.”

Mark: “We’re seeing chatter around STIR/SHAKEN. Have you implemented it, and what are your initial impressions of how it will interact with OpenSIPS?”

We’re actively developing against it, and we’re looking at a lot of different options. OpenSIPS 3.1 LTS just came out, and STIR/SHAKEN support is one of the features in it. I laughed, because I view LTS as a malleable designation, especially with open-source software: just because they say it’s LTS doesn’t mean it’s ready. I’ve got my own personal opinions about how this is going to ‘roll out’, and I’m saying ‘roll out’ with air quotes, because I think there are going to be some hiccups for a variety of reasons. But June 30, 2021 is coming.

I personally think we may see something similar to what happened with the Verizon SMS surcharges, where they set the dates and then somebody with a lot more clout than us came along and said, “Hey, we missed something,” and they punted. I’m not banking on that, but if you’ve been in the telecom industry as long as I have, you’ll understand why, if it gets delivered on time, I’ll be very surprised. Nevertheless, we’re operating under the assumption that it’s going to happen, and we’re developing against it for our own internal solution. We’re also evaluating what some of the larger providers are doing and making a build-versus-buy decision, because at the end of the day, if you need to be compliant by date X and we’re still mid-development, I need a backup plan.

Dan asks, “What are your thoughts, Mike, on data consistency across data centers, you know, things like subscriber data availability outside of a cache?”

That’s a unique problem, though it’s not unique to us. Let me say that a different way: if you’re dealing with registrations, or handling them in any way for your authentication, I can understand that question. We don’t actually support registration. I’m not tracking individual subscriber location data for the purpose of, say, tracking where someone’s handset is, or, if they’ve got three of them, trying to locate them all. Data consistency across data centers has always been a challenge, even across clouds. It really depends on the database technology you’re using. We’ve tried a variety of things: Galera clusters, traditional MySQL master-slave setups, migrating to MariaDB with a similar setup, and databases with integrated data distribution like Couchbase, which is kind of idiot-proof: you just point it at another cluster, turn on XDCR, and go. That works if you’re using JSON documents. But data consistency has always been an issue.

For large volumes of data, we store it locally in the same data center and replicate it later, off hours; actually, replication is constantly happening. That’s for DR purposes, but what you really want is the macro-level data. We all know the highest volume of data we generate comes from the ACC module, and sadly, most of it is what they call missed calls, which stinks because we still have to do the work. We don’t get paid for those calls because they didn’t connect, and it piles up. I call that a tier 2 data source, because you don’t really need it for anything other than troubleshooting; you’re not billing it. But on your completed calls, you’ve got a lot of macro-level data you care about. Do you care about the individual cost of the call? Sure, per call. But when it comes to bill time? No. We care about how many interstate calls we have, how many intrastate, how many international.

What we do is create aggregate-level billing data records that are much smaller and cover fixed time periods, for example 15-minute, hourly, or even daily periods, which we can easily ship around, because that’s the data we use for billing and other functions like credit detection.
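A minimal Python sketch of rolling completed-call CDRs up into 15-minute buckets per jurisdiction. The CDR field names (`start_epoch`, `jurisdiction`, `duration` in seconds) are hypothetical, and buckets are aligned to UTC epoch boundaries.

```python
from collections import defaultdict
from datetime import datetime, timezone
from typing import Dict, Iterable, Tuple

BUCKET_SECONDS = 15 * 60


def aggregate_cdrs(cdrs: Iterable[dict]) -> Dict[Tuple[datetime, str], Dict[str, int]]:
    """Roll completed-call CDRs up into 15-minute buckets per jurisdiction."""
    out: Dict[Tuple[datetime, str], Dict[str, int]] = defaultdict(
        lambda: {"calls": 0, "seconds": 0})
    for cdr in cdrs:
        start = int(cdr["start_epoch"])
        # Align the call start to the beginning of its 15-minute bucket (UTC).
        bucket = datetime.fromtimestamp(start - start % BUCKET_SECONDS, tz=timezone.utc)
        agg = out[(bucket, cdr["jurisdiction"])]
        agg["calls"] += 1
        agg["seconds"] += cdr["duration"]
    return dict(out)
```

Thousands of per-call rows collapse into a handful of rows per bucket, which is what makes these records cheap to ship between data centers.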

Got another good one here about Galera clusters: “How did that work out for you? When we’ve used them in the past, they locked out cluster members to maintain data consistency, which turned out to be not great. I’m more concerned about the data being available now, with eventual consistency later.”

Galera was introduced very early on, and we ran into those same issues: members getting kicked out and data inconsistency across cluster members. We ended up migrating everything away from that to more of a fan-out master-slave setup: one read-write master, and read-only replicas for anything that’s just a read. Using something like ProxySQL or HAProxy, whatever you’re using, to fan those across, with however many replicas you want for reads, and electing write masters at different locations in the network, has worked a lot better.
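The read/write split can be illustrated with a tiny router that sends writes to the master and spreads reads round-robin across replicas. This Python sketch is not ProxySQL or HAProxy configuration; the host names are hypothetical, and classifying by the first SQL keyword ignores cases like `SELECT ... FOR UPDATE` or multi-statement transactions that a real proxy must handle.

```python
import itertools
from typing import List

WRITE_VERBS = {"INSERT", "UPDATE", "DELETE", "REPLACE", "CREATE", "ALTER", "DROP"}


class ReadWriteRouter:
    """Send writes to the write master and spread reads across read-only replicas."""

    def __init__(self, master: str, replicas: List[str]) -> None:
        self.master = master
        # Fall back to the master if no replicas are configured.
        self._replicas = itertools.cycle(replicas or [master])

    def backend_for(self, sql: str) -> str:
        words = sql.split(None, 1)
        verb = words[0].upper() if words else ""
        return self.master if verb in WRITE_VERBS else next(self._replicas)
```

Adding read capacity is then just a longer replica list, while writes stay on one elected master per location.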